The process could not execute ‘sp_replcmds’

SQL Server Transaction LogReader Agent timeout executing sp_replcmds occurs most often when a large transaction(s) are written to the Published database transaction log, or 100s of millions of un-replicated commands from logged index maintenance or changes to replicated tables. Either problem requires the LogReader to read more log entries than the timeout parameter allows causing the “query timeout expired” while executing sp_replcmds.

Status: 2, code: 20011, text: 'The process could not execute 'sp_replcmds' on 'MYSQLPUBLISHER'.'. The process could not execute 'sp_replcmds' on 'MYSQLPUBLISHER'. Status: 2, code: 0, text: 'Query timeout expired'. Status: 2, code: 22037, text: 'The process could not execute 'sp_replcmds' on 'MYSQLPUBLISHER'.'. The agent failed with a 'Retry' status. Try to run the agent at a later time

Use these commands below to explore the transaction log to see if your log file matches either of these patterns.

- Troubleshooting LogReader Timeout executing sp_replcmds via SQL Server Management Studio query

- Find last distributed and “next to be” distribute transactions.

Examine the Transaction Log

USE published_database

dbcc opentran

Replicated Transaction

Information:

Oldest distributed LSN : (54:4986:1)

Oldest non-distributed LSN: (55:4803:2)

--Count number of pending to replicated log entries per transaction

select [Transaction ID], count([Transaction ID]) as 'Count log entries'

from::fn_dblog('55:4803:2',null) -- Oldest non-distributed LSN (55:4803:2)

where [Description] = 'REPLICATE'

group by [Transaction ID]

GO

Transaction ID Count log entries -------------- ----------------- 0000:0001a659 3 0000:0001a935 2 0000:0001a658 500001 0000:0003deff 3 0000:0001a937 3 0000:0001a939 3

Is the pattern of cmds/transaction what you expect for your application?

Is there an unexpected single large batch of log entries?

Simulating LogReader

For diagnostic troubleshooting we can simulate the Log Reader functionality by executing a stored procedure against published database to returns pending data from transaction log. Like Log Reader agent, the SSMS query will make a connection to SQL Server, ask SQL engine to scan the published database transaction log and return undelivered transactions. Learning how long SQL Server query will take to returning next 1 pending transaction, next 10 or next 500, is direct indicator of how long Log Reader agent processes those same undelivered transactions.

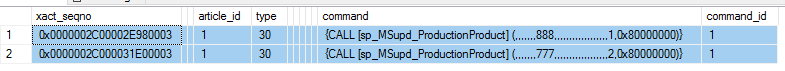

Trace on Publisher shows LogReader executing sp_replcmds, however, sp_replshowcmds automatically converts binary command column to readable text, more useful when troubleshooting. Before executing stop the Log Reader agent job.

Use published_database GO sp_replflush go sp_replshowcmds 2 go sp_replflush go

Did you remember to run the command on the Published database?

In this example, if sp_replshowcmds query took more than 30 minutes to return 2 rows, most likely the Log Reader is scanning gigs of transaction log entries to find these 2 transactions.

- Transaction log contains large amount of unreplicated transaction to be skipped

- Transaction was “open” for long time filling the log with multiple log entered between the BEGIN_TRAN and COMMIT_TRAN log entries

- Read performance problem on the storage subsystem

First Steps to Resolve

If a large number of cmds/transaction occur, increasing the –QueryTimeOut (default 1800 seconds\30 minutes) for the Log Reader is recommended solution. Add –QueryTimeOut parameter directly to the Log Reader Agent Job or to Log Reader agent custom profile in Replication Monitor. This gives Log Reader additional time to complete scans required to process the pending batch of un-replicated changes.

For short-term monitoring, set Log Reader agent ReadBatchSize = 1 and VerboseHistory = 2, having Log Reader agent processes 1 transaction at a time. While this will not speed up the transfer processes, the agent will log progress messages after each transaction v. each batch of 500 transactions. As soon as you’re comfortable the Agent is moving transactions to the Distributor, set ReadBatchSize back to previous setting and restart Log Reader agent.

For a single transaction with 100K commands, the LogReader history may show “waiting for response”. You can monitor process in SQL Management Studio Activity Monitor or running sp_who2 and look for ProgramName = <log reader job> and see if the CPU, and IO counters continue to climb. If these counters are increasing, the LogReader is still processing the transaction log. Using Windows Performance Monitor you may notice READ activity on the drive containing the databases transaction log. Another indication Log Reader log scan activity is progressing.

Be careful when using diagnostic parameters like “-Output c:\temp\logreader.out -Outputverboselevel 3”. These are great for initial troubleshooting, but once you’ve narrowed down the issue, remove these and restart the Agent. Keeping these parameters enabled will generated huge amounts of detailed entries into the output file causing significant slowdown in the Log Reader throughput.

For a quick check on Log Reader agent progress execute sp_replcounters in SSMS query.

Remember to set Agent back to default setting once it has processed the large batch.

Replication Log Reader Agent

https://docs.microsoft.com/en-us/sql/relational-databases/replication/agents/replication-log-reader-agent

Using “Verbose history agent profile.” while troubleshooting Replication

https://repltalk.com/2010/07/13/using-verbose-history-agent-profile-while-troubleshooting-replication/